Introduction

As AI and machine learning systems advance and become part of policing and law enforcement, they raise concerns about ethical use. Predictive policing AI is progressing quickly, while the legal and regulatory frameworks that govern it seem to fall further behind. Domestic and international policing bodies also differ in how they implement artificial intelligence systems. The fundamental issue that predictive policing AI, like AI in general, brings to light is how data is collected. Governmental and law enforcement bodies play a pivotal role: they can mitigate bias in training data to build a more objective and impartial model, or they can deepen that bias, with adverse impacts on specific segments of the population. Notably, the Chinese government’s Integrated Joint Operations Platform (IJOP), a new AI-driven policing system, takes the latter path, prompting international concern about the potential exploitation and targeting of minority populations such as the Uyghurs. As the integration of these powerful technologies grows, Praescient Analytics continues to draw on its experience implementing AI systems across national security and defense organizations to pioneer the thoughtful application of these tools through proper data management and explainability.

Transparency and Fairness within the IJOP

The Integrated Joint Operations Platform (IJOP) is an important example of why transparency, accountability, and fairness must be considered when implementing predictive policing systems. The IJOP uses data fusion, geolocation, and data tracking techniques for monitoring purposes. Its use has raised concerns about biases embedded within the system, which could lead to unfair targeting and discriminatory practices. On one hand, the IJOP’s granular activity alerts enable Chinese authorities to act proactively against activities they deem illegal. On the other hand, the power and data behind those alerts can flag innocent activities that are unrelated to lawbreaking. The clearest example involves the Uyghur Muslim population, for whom the flagging of innocent activities or affiliations has been shown to be disproportionately high.

Keeping the System and User in Check: Data Management in AI

The main concern with the alert and targeting systems used in the IJOP is bias: both bias embedded within the AI system itself and bias that may arise from the individuals using the program. Data management systems can provide an effective way to mitigate both. Through the checks and balances they put in place, such systems can reduce bias within the system as well as the biases of its users, and Microsoft Purview is a prime example. Using Purview’s sensitivity classifications, law enforcement agencies can actively reduce the risk of innocent activities or affiliations triggering alerts within the system. Purview’s data governance capabilities enable agencies to establish robust processes for evaluating the sources and quality of the data used in predictive models. This helps agencies identify and remove biased or discriminatory data sources, reducing the likelihood of false positives or unwarranted targeting of individuals based on innocent activities or affiliations. Additionally, Purview’s transparency features allow agencies to track and document the origin and lineage of data, facilitating audits and accountability checks that uphold the fair and ethical use of information.
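To make the idea of evaluating data sources more concrete, the following is a minimal sketch in plain Python of one possible check: comparing how often each source flags members of different groups. It does not use Purview or any Purview API. The record fields (`source`, `group`, `flagged`), the source names, and the `max_ratio` threshold are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict

def flag_rates_by_source(records):
    """Return {source: {group: flag_rate}} for an iterable of record dicts."""
    # counts[source][group] holds [number flagged, total records]
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for r in records:
        cell = counts[r["source"]][r["group"]]
        cell[0] += 1 if r["flagged"] else 0
        cell[1] += 1
    return {
        source: {group: flagged / total for group, (flagged, total) in groups.items()}
        for source, groups in counts.items()
    }

def sources_needing_review(records, max_ratio=1.25):
    """List sources whose highest group flag rate exceeds the lowest by more than max_ratio."""
    suspect = []
    for source, rates in flag_rates_by_source(records).items():
        lo, hi = min(rates.values()), max(rates.values())
        if hi > 0 and (lo == 0 or hi / lo > max_ratio):
            suspect.append(source)
    return sorted(suspect)

# Hypothetical records: each names its data source, a group label, and
# whether the record triggered an alert.
records = [
    {"source": "source_a", "group": "A", "flagged": True},
    {"source": "source_a", "group": "A", "flagged": True},
    {"source": "source_a", "group": "B", "flagged": False},
    {"source": "source_a", "group": "B", "flagged": True},
    {"source": "source_b", "group": "A", "flagged": True},
    {"source": "source_b", "group": "A", "flagged": False},
    {"source": "source_b", "group": "B", "flagged": True},
    {"source": "source_b", "group": "B", "flagged": False},
]

print(sources_needing_review(records))
```

In this toy data, `source_a` flags group A twice as often as group B, so it is returned for human review, while `source_b` flags both groups at the same rate. A real audit would need far larger samples and a threshold chosen with legal and statistical input; the sketch only illustrates the kind of check a governance process might run.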

Showing Your Work: Explainability in AI

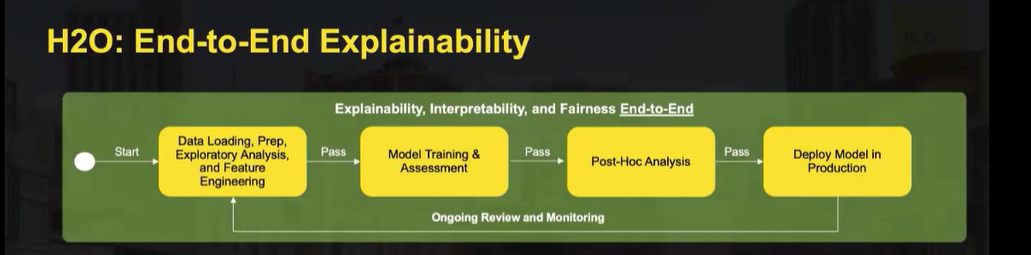

Another critical tool in the implementation of predictive policing AI is explainability. Explainability helps combat both user and machine bias because an explainable system clearly articulates the why and how behind the decisions or predictions it makes. One example is the AI platform H2O.ai, which prioritizes explainability to mitigate bias, unfairness, and other potential issues in predictive policing AI. By incorporating H2O.ai’s explainability features, organizations gain insight into the factors driving model predictions. This transparency allows for thorough scrutiny of system operations, reducing the risk of biased outcomes and unfair practices. H2O.ai’s capabilities allow users to proactively identify and correct biases in data and algorithms, fostering fairness, accountability, and public trust.
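As a rough illustration of what "the factors driving model predictions" can look like, the sketch below breaks a simple linear risk score into per-feature contributions relative to a baseline. This is not H2O.ai's API or method. It is a generic technique for linear models, and the feature names, weights, and values are hypothetical.

```python
def explain_linear_score(weights, baseline, features):
    """Split a linear model's score into per-feature contributions relative to a baseline."""
    contributions = {
        name: weights[name] * (features[name] - baseline[name])
        for name in weights
    }
    # Sort so the most influential factors (by magnitude) come first.
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)

# Hypothetical model weights, population-average baseline, and one individual's features.
weights = {"prior_incidents": 0.8, "late_night_travel": 0.1, "neighborhood_code": 1.5}
baseline = {"prior_incidents": 1.0, "late_night_travel": 2.0, "neighborhood_code": 0.0}
person = {"prior_incidents": 1.0, "late_night_travel": 4.0, "neighborhood_code": 1.0}

for name, contribution in explain_linear_score(weights, baseline, person):
    print(f"{name}: {contribution:+.2f}")
```

Here the largest contribution comes from `neighborhood_code` rather than from anything the individual did, which is the kind of signal a reviewer could use to ask whether a feature is acting as a proxy for a protected group. Production explainability tools handle nonlinear models and feature interactions that this sketch ignores.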

Conclusion

In conclusion, while the Chinese government’s use of predictive policing through the Integrated Joint Operations Platform demonstrates just how effective and advanced these technologies can be, it also underscores the importance of explainability and of safeguards that mitigate bias and misuse. Systems like Purview and H2O.ai are instrumental in achieving this goal. Praescient Analytics, a pioneering organization, uses such tools to enhance the ethical use of AI. By embracing responsible data practices, implementing safeguards, and leveraging advanced technologies, the law enforcement community can harness the benefits of predictive policing while upholding ethical standards and safeguarding individual rights.