Open Source Intelligence is a rapidly growing analytical discipline linked to the rapidly expanding realm of the internet. With the rapid growth of Web 2.0, web sites that focus on user generated content (Tim O’Reilly, 2005), and the constant desire for the coolest social media apps and data distribution tools, the web becomes a virtual gold mine of information on individuals, social groups, companies, and numerous other data types. While the sheer scope of OSINT data types (in both content and metadata), and the rapid growth of new technologies creates opportunities for analysts; it also creates numerous challenges for technologist to develop the tools to read and store that data, let alone make it searchable for the analysts in a useable form.

The National Defense Authorization Act for Fiscal Year 2006 states, “Open-source intelligence (OSINT) is intelligence that is produced from publicly available information and is collected, exploited, and disseminated in a timely manner to an appropriate audience for the purpose of addressing a specific intelligence requirement” (109th Congress, 2006).

OSINT is frequently misunderstood as only coming from social media sites, (i.e. Social Media Intelligence SOCMINT). But OSINT also includes other sources like public speeches, public documents (digital and hardcopy), public broadcasts and commercial websites. The data can be acquired directly from the source, or though data aggregators or feed services such as Lexis Nexis (http://www.lexisnexis.com) and Twitter’s Firehose service (https://dev.twitter.com/streaming/firehose).

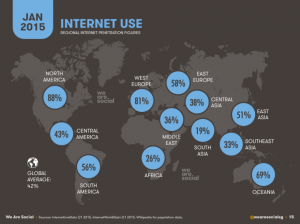

One of the major components driving the growth of OSINT and Web 2.0 is the availability of communications devices connected to the internet in just about any location in the world (Simon Kemp, 2015).

As of August 2015, ‘WeAreSocial.net’ estimates that the world now has 7.529 billion active mobile subscriptions, which is more than the estimated world population of 7.357 billion people (Simon Kemp, 2015). While not every mobile handset has a connection to the internet, 41.7% of the world’s population has access to the internet, and over 81% of social media users access their accounts via mobile devices.

The growth of internet access and new web technologies is enabling formerly disconnected groups to access an ever increasing amount of new information and ideas with little effort. These same resources are enabling others to spread their ideas across an almost limitless internet space with little or no cost. With web tools like Facebook (www.Facebook.com) and WordPress (www.WordPress.com), even a young kid can broadcast information about their 16th birthday party, or a school bake sale to hundreds or even millions of users with a simple post. On the other side of the coin, Bad Actors (i.e. persons intentionally causing disruption) are using similar tools to broadcast their extremist ideologies such as Al Qaeda’s online “Inspire” magazine, or coordinate the sale of illicit materials like those available on the “Silk Road” and “Black Market Reloaded” websites. In addition to the anonymity available on the internet, the internet also spawned a new currency called ‘Bitcoin’ (https://bitcoin.org/en/) that enabled users to remain anonymous while paying for all manner of goods and services available over the internet. Here are some of the positive uses of social media tools;

- Banking industry – due diligence investigations

- Law Enforcement – finding child predators

- Defense industry – population sentiment in operational areas

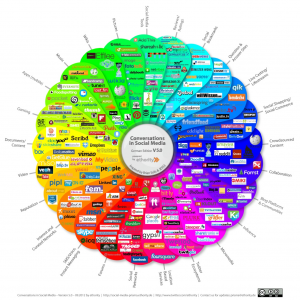

As the number of internet users grows, so do the uses and the desires for new capabilities. A recent search using Google identified 667 million ‘hits’ for the term “Social Media Tools”. While a similar search for “OSINT Tools” only generated 118 thousand hits. That’s a ratio of 5,652:1, demonstrating the disparity of the plethora of information on how to broadcast social media data, but comparatively very little information exists on how to capture and analyze that data. In just looking at the SOCMINT area of OSINT, the analyst can quickly get overwhelmed with the numerous data sources and disparate data types provided by each of the tools. Many of the tools that are designed to broadcast social media information also have components that can be used to monitor other data generators, as well as their competitors.

As seen in the “Social Media Prism” (Ethority, 2014) diagram below, Social Media covers numerous categories of tools beyond the ‘like and share’ type tools that many people are familiar with.

With each social media site or application there are at least 2 layers of data to be considered for analysis. First is the content layer, the information ‘posted’ by the user. Posts can range in complexity from a simple text message of just a few characters, to being as complicated as an embedded video recorded on a mobile phone. An additional complication is that the content layer data may not be in the analyst native language. With the globalization of social media tools it is common to find not only regionalized applications such as “Cloob” (www.cloob.com) in Iran, whereas sites like Facebook are truly global because they can handle numerous languages thanks to their ability to store and display Unicode character sets. The second data layer is called the metadata layer, the ‘background’ information about the posts. Metadata can point to information about the device posting the information, the user, or even descriptors of the file itself. A good example of metadata would be the EXIF (Exchangeable Image File Format) data contained within a video or picture that describes the device and settings in which the image was captured. Depending on the device that was used to take the picture, metadata for the date, time and location may also be embedded in the metadata layer for the image. Data from both the content and the metadata layers can provide opportunities for intelligence linkages and insights about the user or organizations associated with the files and social media accounts. Sometimes the application itself through metadata provides insights about the user such as name, age, and location. Many times this information is transmitted or embedded without any effort on the part of the user.

All of this information has to be; collected, stored in original language, indexed, translated, and made available for the analyst to review with numerous toolsets. In the ‘Social Media Prism’ diagram there are over 280 different social media tools and sites listed. It is fairly easy with today’s technologies to create a basic database to store common information about the user(s) of the sites (User name, last log-on, friends/associates, group memberships, etc.). It is a totally different challenge trying to collect the data from the sites. Using the Social Media Prism example, there may be a need to use 280 different types of data collectors to gather the information from the sites. The IT industry has in general settled on a few types of standards for data access, but not each company or product implements those standards equally, or to the full extent. Some social media sites and tools have an API (Application Programming Interface) that enables developers to access the sites data, such as Facebook’s “Graph API”. Others do not have an ‘outward facing back end connection’, so a developer would need to ‘scrape’ the data using the same UI (user interface) as the logged in user. While the first option can be very quick, it may only provide access to a small subset of data the analyst is looking for. While the scraping approach can be network and time intensive, it may enable the capture of more data points the analyst wants and go well beyond what the vendors API would have provided.

After the data is ‘captured’ from the source it has to be stored. Technologists have to plan for a wide disparity of data types that can be recovered from social media sources. A simple test based database is easy to build, but social media is a much richer set of data than just text. To be successful when designing a social media storage system, a few unique questions have to be answered;

- What data types will be stored (text/audio/images/videos)?

- Will the data be stored in the original format (forensically sound) or will it be transformed before storage (easier indexing with smaller storage)?

- If the data is stored in original formats, will the ‘translation service’ be on an as needed basis, or will a translated cop be placed in another data field to be indexed? If not, does the analyst have the tools necessary to query the data in the original language(s)?

- Not all data gathered is unique. How will the system handle duplicate content data, even when the metadata is different?

- Can the content and metadata layer data points both be queried?

- How will non-text data be handled? Will machine vision algorithms be used to ‘look’ at images and videos to index objects within them, or will a user need to provide a short synopsis of what they saw? With today’s technologies, machine vision may be quick, while the human review may be more accurate in listing the objects from a small search list.

All of these questions help shape the scope of the social media repository. The traditional factors such as storage space, connectivity, and speed all still apply. At any time these infrastructure factors may override the social media special factors listed above. For example, if the data store is limited to 1 Terabyte (TB) of data, it will likely be impossible to store large numbers of still images or videos from a social media site. A work around might be to have a referential link to the original location of the images and hope that it is still there when the analyst follows the link provided. Youtube.com states that over 300 hours of video are uploaded to it site every minute. This would fill up the example 1TB database in about 13 minutes (based on 4 hours standard definition video per Gigabyte of storage).

Making the data readable and searchable for the analyst is another major challenge for OSINT. Numerous sources indicate that the US Government was spending approximately $1.6 billion dollars a year on translation services (US Government Accountability Office, 2013). A large portion of those expenses are for traditional interpreter services. But agencies still invest heavily in human based translation services because many of the automated tools do not cover all the necessary languages at a high enough quality, or cannot accept the numerous types of input (written, typed, spoken, images, colloquialisms) available through OSINT and other data sources. As in many arenas, automation may be fast for a small set of data types, it may not be as accurate or as flexible as human translators.

While some of the OSINT technical challenges have been highlighted already, what has not been discussed are the policy issues. Data on the internet may have varying ‘fair use’ rules. Some of the information available OSINT tools may be copyrighted or trademarked. Just because the tool can capture it, does not mean it is allowed. This particular issue can be a challenge when dealing with international partners. While pulling data from overseas social media sites may not violate US Intelligence Oversight rules, it may violate international agreements depending on what data is collected, and what is used for. On the dissemination side Social media can be a major Operational Security (OPSEC) challenge. A soldier may take a picture of themselves during an exercise in Belarus. But metadata could show them actually being in Crimea. This could unintentionally defeat a military’s complex deception plan with a single misplaced social media post. An employee at a factory might take a picture of the assembly line at their new job, but inadvertently share images of the new ‘XyZ Industries’ device that will go on sale next spring. Now XyZ Industries competitors have insight into the next generation product from XyZ Industries. This could potentially cost XyZ Industries millions in lost profits.

OSINT and SOCMINT are just category names for the tools and methods used to monitor an ever changing global internet. Open Source and Social media data can be hard to capture and manage, let alone validate the information as reliable. Yet OSINT’s ability to be gathered without direct knowledge of the source(s), and the large breadth of placement and access of those sources can be immensely valuable. In some cases OSINT may provide indicators and Warnings (I&W) of population or industry groups well in advance of other traditional sources. If one can successfully navigate the unique challenges of the OSINT world and maintain access to the ever growing number of cutting edge sites and applications, OSINT could truly provide a gold mine of information for analysts in any industry.

Bibliography

109th Congress. (2006). PUBLIC LAW 109–163 – National Defense Authorization Act for Fiscal Year 2006. Washington D.C.: US Government.

Brinker, S. (2015, January 01). www.chiefmartec.com. Retrieved October 22, 2015, from chiefmartec.com: http://chiefmartec.com/2015/01/marketing-technology-landscape-supergraphic-2015/

Ethority. (2014, June 13). Social Media Prism. Retrieved October 21, 2015, from Social Media Prism: http://ethority.net/weblog/social-media-prisma/

Simon Kemp. (2015, August 3). Global Digital Statshot: August 2015. Retrieved October 21, 2015, from We Are Social: http://wearesocial.sg/blog/2015/01/digital-social-mobile-2015/

Tim O’Reilly. (2005, 09 30). Retrieved 10 21, 2015, from Oreilly.com: http://www.oreilly.com/pub/a/web2/archive/what-is-web-20.html

US Government Accountability Office. (2013, February 25). Defense Contracting: Actions Needed to GAO-13-251R – Explore Additional Opportunities to Gain Efficiencies in Acquiring Foreign Language Support. Retrieved October 21, 2015, from US Government Accountability Office: http://www.gao.gov/products/GAO-13-251R